You’re holding a supercomputer in your pocket. It’s a cliché, but it’s true. Yet, for years, the most advanced Artificial Intelligence models felt like they belonged in a distant, cloud-based data center, not on your personal device. They were like magnificent, fire-breathing dragons—incredibly powerful, but too big, too hot, and too hungry to keep in your living room.

What if we could tame the dragon? What if we could shrink it, without losing its fire?

That’s exactly what Model Quantization is doing for AI. It’s the unsung hero, the behind-the-scenes magic that is quietly revolutionizing where and how we run intelligent applications. From talking to your smartphone assistant without a Wi-Fi connection to having complex photo filters applied instantly, quantization is the key.

But what is it? If you’re not a machine learning engineer, terms like “8-bit integer precision” can sound like techno-babble. Don’t worry. In this deep dive, we’re going to demystify model quantization. We’ll unpack what it is, why it’s a game-changer, how it works under the hood, and the trade-offs every developer should know.

Let’s learn how to shrink the giants.

What is Model Quantization? The Simple Analogy

Before we get technical, let’s use an analogy everyone can understand: Moving House.

Imagine a state-of-the-art, professional kitchen. It’s filled with every imaginable tool: a dozen different knives, a specialty garlic press, a stand mixer, a food dehydrator, a pasta maker… the works. This kitchen is incredibly precise and can produce culinary masterpieces. This is your full-sized, pre-quantized AI model. It operates in 32-bit floating-point precision (often called FP32), which means it uses a vast range of numbers with many decimal points to be hyper-accurate.

Now, you need to move this kitchen into a small downtown apartment. You can’t take everything. You have to make choices.

- You keep the three most essential knives instead of twelve.

- You leave the bulky stand mixer and decide you can whisk by hand (it’s almost as good).

- You realize the single-purpose garlic press is redundant when you have a good knife.

You are, effectively, quantizing your kitchen. You’re reducing the “precision” and “range” of your tools to fit a smaller, more efficient space, with the conscious acceptance that you might lose the ability to make a perfect soufflé once a year. For 99% of your cooking needs, your new, compact kitchen works perfectly.

Model Quantization is the process of reducing the numerical precision of the weights and calculations in a neural network. It’s about moving the AI model from a sprawling, data-center “kitchen” (32-bit floats) into a compact, efficient “apartment” (like 8-bit integers, or even lower).

The Technical Translation

In computing, numbers are represented in bits. The more bits you have, the more numerous and precise the values you can represent.

- FP32 (Full-Size Kitchen): Uses 32 bits per number. This allows for a huge range (from very small to very large) and high precision (roughly seven significant decimal digits). This has traditionally been the default format for training AI models.

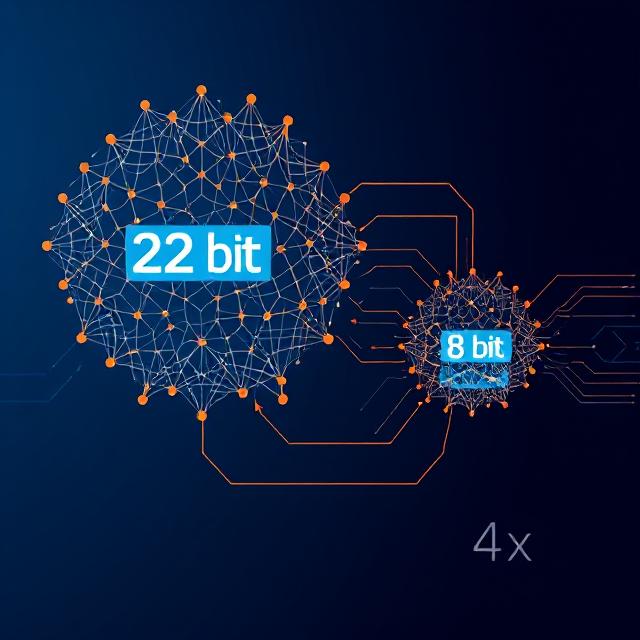

- INT8 (Compact Apartment Kitchen): Uses only 8 bits per number. This allows for just 256 possible integer values (e.g., -128 to 127). Quantization is the process of mapping the vast range of FP32 values into this much smaller set of INT8 values.

The result? A model that is roughly 4x smaller and, on hardware with native INT8 support, can often run 2-4x faster, while consuming significantly less power.
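The "roughly 4x" figure falls straight out of the bit widths. Here is a minimal sketch of that arithmetic, assuming a hypothetical model with 25 million parameters (the count and the helper name `model_size_mb` are made up for illustration) and ignoring the small overhead of storing scales and zero-points:

```python
def model_size_mb(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bits_per_param / 8 / 1_000_000

# Hypothetical parameter count, chosen only to keep the numbers round.
NUM_PARAMS = 25_000_000

fp32_mb = model_size_mb(NUM_PARAMS, 32)
int8_mb = model_size_mb(NUM_PARAMS, 8)

print(f"FP32 weights: {fp32_mb:.0f} MB")
print(f"INT8 weights: {int8_mb:.0f} MB")
print(f"Size reduction: {fp32_mb / int8_mb:.0f}x")
print(f"Distinct values in 8 bits: {2 ** 8}")
```

The speed-up, unlike the size reduction, is not guaranteed by arithmetic alone; it depends on whether the target chip has fast integer paths.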

Why Do We Even Need to Quantize Models? The “Why Now?”

The drive for quantization isn’t just an academic exercise; it’s fueled by concrete, real-world demands.

1. The March to the Edge: Bringing AI to You

“Edge computing” is the trend of processing data closer to where it’s created, rather than in a centralized cloud. Your phone, your smartwatch, your security camera, your car—these are all “edge devices.” Sending data to the cloud and back introduces latency (delay), requires a constant internet connection, and raises privacy concerns.

We want real-time speech translation while we’re offline. We want our home security cameras to identify a package locally without streaming our lives to a server. We want AR filters that react instantly without lag. These experiences are only possible if the AI model can run on the device itself. Quantization makes this feasible.

2. The Green Code: Energy and Cost Efficiency

Large AI models are energy hogs. Training a single massive model can consume as much electricity as several homes use in a year. While inference (using the model) is less costly, running giant FP32 models at scale in data centers still represents a massive financial and environmental cost.

A quantized model is a leaner model. It requires fewer memory transfers and simpler calculations, which directly translates to lower power consumption and reduced operational costs for companies serving millions of AI queries per hour.

3. Unlocking Real-Time Performance

Speed is a feature. In applications like autonomous driving, video analytics, or live captioning, every millisecond counts. The reduced memory footprint and simplified arithmetic of quantized models mean they can process data and return inferences much faster. This lower latency is critical for creating seamless, real-time user experiences.

How Does Quantization Actually Work? The Nuts and Bolts

So, how do we cram our professional kitchen into a tiny apartment without ruining dinner? The process isn’t just about chopping off decimal points; it’s a careful, calibrated mapping.

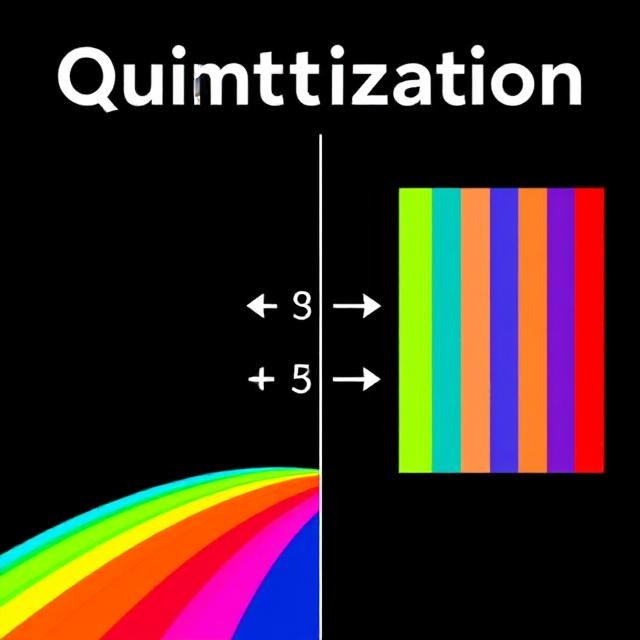

Let’s visualize the core concept. The accompanying chart illustrates how the wide, continuous range of values in a full-precision FP32 model is mapped to a limited set of integers in an INT8 quantized model.

The key takeaway from this chart is that quantization is not a random compression. It’s a strategic trade-off. The bell-shaped line represents the distribution of the original, high-precision FP32 values in a model. The bar chart shows the quantized INT8 values they are mapped to. Notice how the INT8 values are sparser and less precise, but they roughly follow the same shape as the original FP32 distribution. The goal is to lose as little information as possible in this process.
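To make the chart's idea concrete, here is a small sketch that draws bell-shaped values and snaps them onto an INT8 grid. It assumes a simple symmetric mapping (the largest magnitude lands on 127) and synthetic Gaussian data standing in for real model weights; neither choice comes from a specific framework.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable
values = [random.gauss(0.0, 1.0) for _ in range(10_000)]  # synthetic "weights"

# Symmetric mapping: the largest magnitude maps to +/-127.
max_abs = max(abs(v) for v in values)
scale = max_abs / 127

def quantize(x: float) -> int:
    """Round to the nearest grid point and clamp to the INT8 range used here."""
    q = round(x / scale)
    return max(-127, min(127, q))

codes = [quantize(v) for v in values]
restored = [q * scale for q in codes]

distinct_levels = len(set(codes))
max_error = max(abs(v - r) for v, r in zip(values, restored))

print(f"distinct INT8 levels used: {distinct_levels}")
print(f"worst round-trip error: {max_error:.5f}")
print(f"half a quantization step: {scale / 2:.5f}")
```

Ten thousand different floats collapse onto at most 255 integer codes, yet no single value moves by more than half a step. That is the "same shape, coarser grid" effect the chart shows.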

The process can be broken down into two main types:

1. Post-Training Quantization (PTQ)

This is the most straightforward and common approach. You take a model that has already been fully trained to high accuracy in FP32, and you simply convert it to a lower precision.

The Steps:

- Take a trained FP32 model.

- Feed it a small, representative sample of data (this is called a calibration dataset). This helps the quantizer understand the typical range and distribution of the values in the model’s calculations.

- Determine the Scale and Zero-Point: The quantizer calculates a scale factor (which maps the FP32 range to the INT8 range) and a zero-point (the INT8 value that represents the real value 0.0, so that zero is stored exactly).

- Convert the Weights: Apply the scale and zero-point to every weight in the network, transforming them from FP32 to INT8. Activations are quantized at inference time using the ranges observed during calibration.

Pros of PTQ: Fast, easy, and requires no retraining. It’s a great starting point.

Cons of PTQ: Can lead to a noticeable, though often acceptable, drop in accuracy.
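The PTQ steps above can be sketched in a few lines of plain Python. This is a minimal illustration of min/max calibration with an affine (scale plus zero-point) mapping, not the exact procedure any particular framework uses. The calibration values, the weights, and the helper names `calibrate`, `quantize`, and `dequantize` are all hypothetical.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed INT8 range

def calibrate(samples: List[float]) -> Tuple[float, int]:
    """Derive scale and zero-point from the min/max seen in calibration data."""
    lo = min(min(samples), 0.0)  # force 0.0 into the range so it maps exactly
    hi = max(max(samples), 0.0)
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = round(QMIN - lo / scale)
    return scale, zero_point

def quantize(x: float, scale: float, zero_point: int) -> int:
    q = round(x / scale) + zero_point
    return max(QMIN, min(QMAX, q))  # clamp anything outside the calibrated range

def dequantize(q: int, scale: float, zero_point: int) -> float:
    return (q - zero_point) * scale

# Hypothetical values observed while running sample inputs through the model.
calibration = [-0.5, 0.0, 0.3, 1.2, 2.05]
scale, zero_point = calibrate(calibration)

weights = [-0.5, 0.0, 0.75, 2.05]
q_weights = [quantize(w, scale, zero_point) for w in weights]
restored = [dequantize(q, scale, zero_point) for q in q_weights]

print("scale:", scale, "zero_point:", zero_point)
print("INT8 codes:", q_weights)
print("restored:  ", restored)
```

With this data the scale works out to about 0.01 and the zero-point to -78, so the real value 0.0 is stored as the integer -78 and comes back as exactly 0.0. Real toolchains often use smarter range estimates than plain min/max (percentiles, for example), since a single outlier in the calibration set stretches the grid for everyone.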

2. Quantization-Aware Training (QAT)

For scenarios where every bit of accuracy counts, we have a more sophisticated method. Instead of quantizing after the fact, we simulate the effects of quantization during the training process.

Think of it as training a chef specifically for the compact apartment kitchen. They learn which techniques work best with the limited tools available, becoming experts in that constrained environment.

The Steps:

- Start with a pre-trained FP32 model.

- Insert “Fake Quantization” Nodes: During the forward pass of training, these nodes simulate the rounding and clamping effect of INT8 precision. The model learns to correct for the error that quantization will introduce.

- Train as Usual: The backward pass and weight updates are still done in FP32 for precision.

- Final Conversion: After this “fine-tuning,” the model is converted to true INT8, having already adapted to the constraints.

Pros of QAT: Typically recovers more accuracy than PTQ, often nearly matching the original FP32 model.

Cons of QAT: More complex and computationally expensive, as it requires extra training time.
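Here is a toy sketch of the "fake quantization" idea, shrunk down to a model with a single weight. It assumes a deliberately coarse, hypothetical step size of 0.05 and uses the straight-through estimator, a common trick in which the gradient computed for the rounded weight is applied to the full-precision weight as if the rounding were not there. Real QAT in a framework involves far more machinery; this only shows the shape of the loop.

```python
def fake_quantize(w: float, scale: float, qmin: int = -128, qmax: int = 127) -> float:
    """Round to the integer grid and clamp, but hand back a float."""
    q = max(qmin, min(qmax, round(w / scale)))
    return q * scale

# Toy data generated from y = 0.37 * x; the "model" is one weight.
TARGET = 0.37
xs = [0.5, 1.0, 1.5, 2.0]
ys = [TARGET * x for x in xs]

scale = 0.05   # hypothetical, deliberately coarse quantization step
w = 0.0        # latent FP32 weight that the optimizer updates
lr = 0.05      # learning rate

for _ in range(200):
    w_q = fake_quantize(w, scale)  # forward pass sees the quantized weight
    # Gradient of the mean squared error with respect to w_q ...
    grad = sum(2 * (w_q * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    # ... applied directly to the FP32 weight (straight-through estimator).
    w -= lr * grad

final_weight = fake_quantize(w, scale)
print(f"latent FP32 weight: {w:.4f}")
print(f"deployed quantized weight: {final_weight:.2f} (target {TARGET})")
```

The latent weight settles near a rounding boundary, and the deployed weight lands on a grid point within one step of the target. The model never gets to use 0.37 itself, so training steers it toward the grid points nearest that value.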

The Trade-Offs: What Do We Lose?

Quantization isn’t a free lunch. You are trading precision for efficiency. The primary cost is a potential loss in model accuracy.

This “accuracy drop” isn’t always a deal-breaker. For many tasks, like classifying images into general categories (e.g., “cat,” “dog,” “car”), the drop might be under 1 to 2 percentage points, which is rarely noticeable in practice. The model’s “confidence” might shift slightly, but its final answer remains the same.
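A tiny example shows why the final answer often survives. The sketch below uses a hypothetical three-class linear classifier with made-up weights and features, quantizes the weights with a symmetric INT8 mapping, and compares predictions before and after.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def quantize_dequantize(w, scale):
    """Snap a weight to the INT8 grid and convert it back to a float."""
    q = max(-127, min(127, round(w / scale)))
    return q * scale

# Hypothetical classifier: one row of weights per class.
labels = ["cat", "dog", "car"]
weights = [
    [0.82, -0.41, 0.13],
    [0.10, 0.57, -0.33],
    [-0.64, 0.22, 0.91],
]
features = [1.0, 0.5, -0.25]  # made-up input

max_abs = max(abs(w) for row in weights for w in row)
scale = max_abs / 127
weights_q = [[quantize_dequantize(w, scale) for w in row] for row in weights]

def predict(w_rows):
    logits = [sum(w * x for w, x in zip(row, features)) for row in w_rows]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

label_fp32, conf_fp32 = predict(weights)
label_int8, conf_int8 = predict(weights_q)

print(f"FP32: {label_fp32} ({conf_fp32:.4f})")
print(f"INT8: {label_int8} ({conf_int8:.4f})")
```

Both versions pick the same class, and the confidence moves only in the third decimal place or so. The rounding error per weight is tiny compared with the gap between the top two classes. When that gap is razor-thin, predictions can flip, which is where the measured accuracy drop comes from.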

However, for highly sensitive regression tasks (e.g., predicting the precise trajectory of a spacecraft) or tasks that rely on very fine-grained details, the loss of precision can be critical. In these cases, developers might opt for a less aggressive quantization, like 16-bit (FP16), which offers a middle ground between size and accuracy.
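To get a feel for that middle ground, the sketch below round-trips a few sample values through FP16 (using the half-precision format in Python's standard `struct` module) and through an INT8 grid. The INT8 grid assumes a hypothetical value range of -4 to 4, and the sample values are arbitrary.

```python
import struct

def to_fp16(x: float) -> float:
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_int8(x: float, scale: float) -> float:
    """Round-trip through a symmetric INT8 grid with the given scale."""
    q = max(-127, min(127, round(x / scale)))
    return q * scale

# Hypothetical range of [-4, 4] spread over the INT8 codes.
scale = 4.0 / 127
values = [0.001, 0.0123, 0.5, 1.2345, 3.9]

for v in values:
    err16 = abs(v - to_fp16(v))
    err8 = abs(v - to_int8(v, scale))
    print(f"{v:>7}: FP16 error {err16:.6f}, INT8 error {err8:.6f}")
```

For these samples FP16 stays closer to the original every time, and the difference is most dramatic for the small values, which the INT8 grid rounds all the way down to zero. The price is that FP16 needs twice the memory of INT8.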

Real-World Magic: Where You See Quantization Today

This isn’t just a theoretical concept. It’s happening all around you.

- Your Smartphone: Google’s Pixel phones use quantized models for their incredible computational photography (Night Sight, Magic Eraser). Apple’s Neural Engine runs quantized models for Face ID, Siri, and photo analysis.

- Social Media Filters: The instant AR filters on Instagram, Snapchat, and TikTok rely on tiny, quantized models to track your face and apply effects in real-time.

- Live Translation Apps: Tools like Google Translate can download small, quantized language packs for offline use, allowing for near-instant speech-to-speech translation.

- Autonomous Vehicles: While the core AI might run on powerful hardware, many perception and decision-making subsystems use quantized models to ensure lightning-fast response times.

The Future is Small: What’s Next?

The quantization journey is far from over. The field is pushing into even more extreme territories:

- Binary and Ternary Quantization (1-2 bits): Research is exploring models where weights are reduced to just two values (-1/+1) or three (-1/0/+1). This is the absolute frontier of model compression.

- Hardware-Software Co-Design: Companies like Apple, Google, and NVIDIA are designing chips with specific cores (like TPUs and NPUs) that are built to run quantized INT8 operations at blistering speeds, making the software trick even more effective.

- Automated Quantization: Tools are emerging that can automatically find the optimal quantization strategy for a given model and target hardware, taking the guesswork out for engineers.
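To see how extreme the ternary idea from the list above is, here is a minimal sketch. It assumes a threshold of 0.7 times the mean absolute weight, a heuristic that appears in some ternary-weight research and is treated here purely as an assumption. The weights and the function name `ternarize` are made up for illustration.

```python
def ternarize(weights, threshold_factor=0.7):
    """Map weights to {-1, 0, +1} plus one shared FP32 scaling factor."""
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    threshold = threshold_factor * mean_abs
    # Small weights become 0; the rest keep only their sign.
    codes = [0 if abs(w) <= threshold else (1 if w > 0 else -1) for w in weights]
    kept = [abs(w) for w, c in zip(weights, codes) if c != 0]
    alpha = sum(kept) / len(kept) if kept else 0.0
    return codes, alpha

weights = [0.9, -0.05, 0.4, -0.7, 0.02, -0.3]  # made-up FP32 weights
codes, alpha = ternarize(weights)
approx = [alpha * c for c in codes]

print("ternary codes:", codes)
print("shared scale: ", alpha)
print("approximation:", approx)
```

Six floating-point numbers become six tiny codes plus a single shared scale. The approximation is crude, which is why models at this precision generally need to be trained with the constraint in mind rather than converted after the fact.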

FAQ: Your Model Quantization Questions, Answered

1. Is quantization basically the same as compressing a file, like a ZIP folder?

That’s a great way to think about it! The intention is similar—to make something smaller and more portable. However, while a ZIP file is lossless (you get back the exact original file when you decompress), quantization is often lossy. It’s more like converting a high-quality FLAC audio file into an MP3. You get a much smaller file that sounds almost identical for most purposes, but some fine, inaudible details are permanently lost to achieve that size reduction. Quantization strategically “loses” some numerical precision to make the AI model drastically more efficient.

2. Will quantizing a model always make it less accurate?

In theory, yes, because you are reducing its numerical precision. However, in practice, the drop in accuracy is often negligible and well worth the performance gains. For many common tasks like image classification or speech recognition, a quantized model might lose less than 1-2% accuracy, which doesn’t impact its real-world functionality. With advanced techniques like Quantization-Aware Training (QAT), the accuracy drop can be minimized to the point where it’s virtually indistinguishable from the original model for its intended task.

3. When should I avoid quantizing my model?

You should be cautious with quantization for applications where extreme precision is critical. This includes:

- Medical imaging, where a tiny error in pixel-level prediction could impact a diagnosis.

- Scientific computing and complex physics simulations that rely on highly precise numerical values.

- Fine-grained regression tasks, like predicting the exact price of a stock or the stress on a single component in a bridge model.

In these cases, you might stick with FP32 or use a less aggressive quantization like FP16.

4. Can any AI model be quantized?

Most modern models (especially convolutional networks and transformers) quantize very well. However, models whose weights or activations contain large outliers, or that include highly sensitive operations, can sometimes be challenging, because a few extreme values stretch the range and leave little resolution for everything else. The only way to know for sure is to try it! The standard practice is to take your trained model, run it through Post-Training Quantization (PTQ), and then rigorously evaluate its accuracy on your test data. It’s a low-cost, high-reward experiment.

5. I’m a developer. How do I get started with quantizing my models?

It’s easier than ever thanks to major frameworks! You don’t need to be a math wizard. Here’s a quick start:

- For TensorFlow/Lite: Use the TensorFlow Lite Converter, which has built-in options for PTQ.

- For PyTorch: Use the torch.quantization module, which supports both PTQ and QAT.

Start with Post-Training Quantization (PTQ)—it’s often just a few lines of code to convert your model and see a 4x reduction in size with a minimal accuracy hit. The official documentation for these frameworks has excellent beginner-friendly guides.

Conclusion: Power to the People

Model quantization is more than a technical optimization; it’s a democratizing force. It takes the immense power of artificial intelligence, once locked away in cloud server racks, and puts it directly into the hands of billions of people, on their personal devices. It makes AI faster, cheaper, more private, and more accessible.

It’s the art of building a mighty dragon small enough to sit on your shoulder, whispering intelligent answers in your ear, without burning down your house. And as this technology continues to evolve, the line between our devices and intelligent assistants will blur into nothingness, creating a future where advanced AI is simply… there. Always.