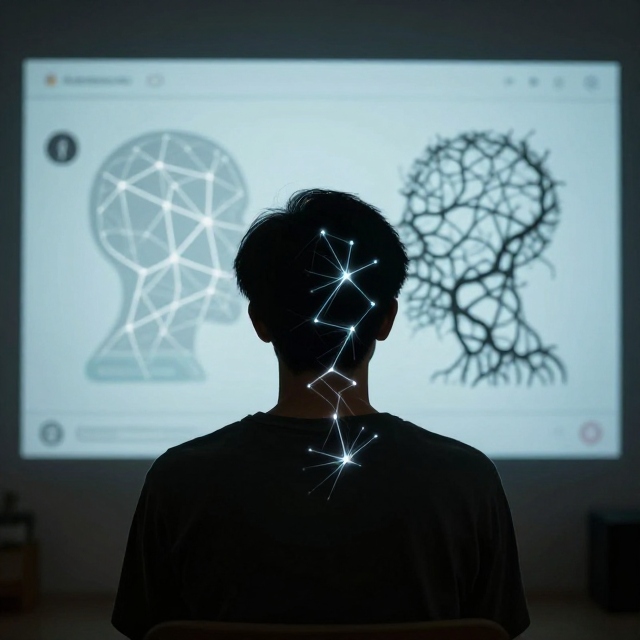

As artificial intelligence moves from simple chatbots to systems capable of identifying software vulnerabilities and influencing human psychology, the stakes for safety have never been higher. Recently, OpenAI announced it is officially searching for a new executive to lead its Preparedness team—a role dedicated to studying and mitigating “catastrophic risks” emerging from the frontier of AI development.

The position, which commands a base salary of $555,000 plus equity, signals a renewed focus on the company’s internal safety frameworks during a period of significant leadership transition within its safety departments.

A Shifting Risk Landscape

In a recent post on X, CEO Sam Altman acknowledged that modern AI models are beginning to present “real challenges.” He specifically highlighted two areas where the line between benefit and harm is increasingly thin:

- Cybersecurity: Models are becoming so proficient that they can identify critical system vulnerabilities. The goal is to empower defenders without inadvertently handing a master key to attackers.

- Mental Health: As models become more persuasive and human-like, OpenAI is beginning to track their long-term psychological impact on users.

Beyond these immediate concerns, the role is tasked with managing “catastrophic risks” ranging from biological capabilities to highly speculative (yet high-impact) nuclear threats and the safety of self-improving systems.

The Preparedness Framework

The incoming executive will be responsible for executing OpenAI’s Preparedness Framework. This living document outlines the company’s strategy for:

- Tracking: Monitoring the evolution of “frontier capabilities.”

- Evaluating: Assessing whether new models cross specific risk thresholds.

- Mitigating: Ensuring that harmful capabilities are neutralized before a model is released to the public.

Leadership Evolution at OpenAI

This hiring search comes at a pivotal moment for the organization. The Preparedness team was initially formed in 2023, but the leadership landscape has shifted rapidly since then.

The former Head of Preparedness, Aleksander Madry, was recently reassigned to a role focused on AI reasoning. His move follows the departure or reassignment of several other high-profile safety executives over the past year. By filling this role now, OpenAI appears to be doubling down on its commitment to stay ahead of the very technology it is creating.

Joining the Front Lines of AI Safety

Altman’s call to action was clear: OpenAI is looking for a leader who can help the world navigate the “dual-use” nature of AI—making systems more secure for everyone while ensuring that the leap toward self-improving intelligence remains under human control.

Navigating the “Safety vs. Competition” Paradox

The evolution of the Preparedness Framework includes a notable and controversial update: a “reciprocity” clause. OpenAI has indicated that it may “adjust” its safety requirements if a competing AI laboratory releases a high-risk model without similar safeguards.

This policy highlights the delicate balance between maintaining a competitive edge in the global AI race and upholding rigorous safety standards. It suggests that if the industry baseline for caution drops, OpenAI may recalibrate its own deployment thresholds to avoid being sidelined by less-regulated competitors.

The Human Element: Mental Health & Accountability

While technical risks like cybersecurity often dominate the headlines, the Head of Preparedness will also face a growing crisis regarding human-AI interaction. Generative AI is no longer just a productivity tool; for many, it has become a primary social outlet, leading to significant ethical and legal challenges:

- Reinforcement of Delusions: Recent lawsuits allege that chatbots have, in some cases, reinforced harmful thought patterns or delusions in vulnerable users.

- Social Isolation: There are mounting concerns that heavy reliance on AI companions can exacerbate feelings of loneliness and detachment from real-world communities.

- Crisis Management: Tragic reports have linked AI interactions to incidents of self-harm.

In response, OpenAI has stated it is actively refining ChatGPT’s ability to detect emotional distress and proactively connect users to professional support resources. The incoming executive will be tasked with bridging the gap between “capable” AI and “empathetic” AI that understands the gravity of its influence on the human psyche.

The Path Forward

The “Head of Preparedness” role is perhaps one of the most complex positions in Silicon Valley today. It requires a leader who can navigate high-level geopolitical competition while simultaneously addressing the intimate, psychological risks that occur when a human talks to a machine.

FAQ: OpenAI’s Search for a Head of Preparedness

Q1: What is the “Head of Preparedness” role, and why is it important?

A1: This is a senior executive position at OpenAI dedicated to leading the company’s efforts to study, evaluate, and mitigate “catastrophic risks” from advanced AI. Filling it signals a major internal focus on safety as AI models gain unprecedented capabilities in areas like cybersecurity and psychological influence. The role is central to ensuring that powerful AI systems are developed and released responsibly.

Q2: What does a “catastrophic risk” from AI entail?

A2: OpenAI categorizes these as high-stakes, potentially society-scale threats. They range from more immediate concerns—like AI models that can exploit critical software vulnerabilities or negatively impact mental health—to more speculative frontier risks, such as AI-assisted creation of biological threats, autonomous systems influencing nuclear security, or the challenges of controlling self-improving AI.

Q3: What is the “Preparedness Framework”?

A3: It’s OpenAI’s living strategy for managing AI risks. The framework has three core pillars:

- Tracking: Continuously monitoring the development of cutting-edge (“frontier”) AI capabilities.

- Evaluating: Rigorously testing new models against specific risk thresholds.

- Mitigating: Implementing safeguards to neutralize harmful capabilities before any public release.

Q4: Why is there a “reciprocity clause” in the safety policy?

A4: The clause states that OpenAI may adjust its safety requirements if a competitor releases a high-risk model without similar safeguards. This highlights a core tension in the industry: the balance between maintaining rigorous safety standards and staying competitive. It’s a controversial acknowledgment that safety protocols may be influenced by the actions of other labs in a fast-paced race.

Q5: Why is this role being filled now, given the team was formed in 2023?

A5: The search follows a period of significant transition within OpenAI’s safety leadership. Several key executives have departed or been reassigned. Filling this role now represents an effort to re-stabilize and reinforce the company’s safety structures during a critical phase of AI advancement and internal change.

Q6: What are the specific mental health and human interaction risks mentioned?

A6: As AI becomes more persuasive and socially integrated, new risks emerge:

- Reinforcement of Harm: Chatbots could potentially reinforce a user’s harmful delusions or thought patterns.

- Social Isolation: Over-reliance on AI companionship might exacerbate loneliness.

- Crisis Scenarios: There are documented cases where AI interactions have been linked to self-harm.

OpenAI notes it is working on improving ChatGPT’s ability to detect distress and direct users to human support resources.

Q7: What is the salary for this position, and what does that indicate?

A7: The base salary is $555,000, plus equity. This competitive compensation package underscores the role’s seniority, the high level of expertise required, and the priority OpenAI is placing on attracting top talent to lead its catastrophic risk mitigation efforts.

Q8: What kind of leader is OpenAI looking for?

A8: According to Sam Altman, OpenAI needs a leader who can navigate the “dual-use” nature of AI, maximizing its benefits for security and society while controlling its risks. This person must operate at both the macro level (geopolitical competition, industry standards) and the micro level (individual psychological impact), making it one of the most complex roles in tech today.
