You’ve probably been there. You type a prompt into ChatGPT, “Write a resignation letter that is professional but also hints that my boss is a micromanager,” and within seconds, an impeccable draft appears. Or perhaps you’ve used an AI tool to brainstorm blog topics, write a product description, or even generate a poem about a lovesick robot.

The results can feel nothing short of magical. It’s easy to imagine a tiny, conscious author living inside your computer, carefully crafting each sentence.

But the truth is both more complex and more fascinating. Generative AI doesn’t “think” or “understand” in the human sense. So, how does it work? How can a machine string words together with such coherence and creativity?

Grab a coffee, and let’s pull back the curtain. We’re going to break down how generative AI generates text, step by step. No computer science degree needed.

The Foundation: It’s All About Being a Super-Powered Guesser

At its heart, a generative AI model like GPT-4 or Google’s Gemini is a prediction machine. Think of it as the world’s most sophisticated autocomplete.

When you’re texting a friend and your phone suggests the next word, it’s making a simple prediction based on the last few words you typed. Generative AI does the same thing, but on a scale so vast it’s nearly incomprehensible.

It doesn’t know what a “cat” is, but it knows with incredible accuracy that the word “cat” is very likely to appear after phrases like “the curious” or “allergic to.” It’s a probability machine for language. Give it a sequence of words, and it calculates the statistical likelihood of every entry in its vocabulary (typically tens of thousands of words and word fragments) being the next one in line.

This core concept is known as a “language model.” Its entire purpose is to model the patterns and structures of human language.
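To make the “super-powered autocomplete” idea concrete, here’s a deliberately tiny sketch: a bigram model that predicts the next word from only the previous word, using a made-up three-sentence corpus. The function name `train_bigram_model` is hypothetical, and real models like GPT-4 learn far richer patterns with a Transformer over long contexts, so treat this as an illustration of next-word probabilities, not of how those systems are built.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Count which word follows which, then turn the counts into probabilities."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, followers in counts.items():
        total = sum(followers.values())
        model[prev] = {word: n / total for word, n in followers.items()}
    return model

# Hypothetical toy corpus; real training data is terabytes of text.
corpus = [
    "the curious cat sat on the mat",
    "the curious cat chased the mouse",
    "the curious dog barked",
]
model = train_bigram_model(corpus)
print(model["curious"])  # "cat" gets about 0.67, "dog" about 0.33
```

After “curious,” this toy model has seen “cat” twice and “dog” once, so it predicts “cat” with roughly two-thirds probability. That’s the same kind of guess a real language model makes, just with vastly more context and data.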

The Brain Behind the Brawn: The Transformer Architecture

If the language model is the concept, the Transformer is the brilliant brain that made it all possible. Introduced by Google researchers in 2017 in a now-famous paper called “Attention Is All You Need,” the Transformer architecture was the breakthrough that kicked off the generative AI revolution.

So, what makes it so special?

Before Transformers, AI models (such as recurrent neural networks) processed text one word at a time, in order. To connect a word at the end of a paragraph with one at the beginning, they had to carry information through every word in between. This was slow and inefficient, and context often got lost along the way.

The Transformer changed the game with a mechanism called “self-attention.”

A Simple Analogy: The Master Chef

Imagine a master chef preparing a complex stew. A pre-Transformer AI would taste each ingredient one by one: first the salt, then the pepper, and then the beef. It would struggle to understand how they all relate.

The Transformer, however, is like a chef who can taste the entire pot at once. It can understand that the word “it” in the sentence “The cat chased the mouse because it was hungry” most likely refers to the “cat.” It can grasp the connection between “The company invested in new hardware” and “Their old servers were notoriously unreliable,” even though the related words are far apart.

This ability to see and weigh the significance of every word in a sentence (or even an entire document) simultaneously is the secret sauce. It’s what allows modern AIs to maintain context, handle complex grammar, and produce text that feels coherent and relevant over long passages.
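Under the hood, self-attention is mostly arithmetic. The “Attention Is All You Need” paper describes it as scaled dot-product attention: each word’s query vector is compared with every word’s key vector, the scores are turned into weights with a softmax, and those weights blend the words’ value vectors. This sketch assumes hand-picked two-number vectors for “cat,” “mouse,” and “it”; a real Transformer learns these vectors, uses much larger ones, and runs many attention heads in parallel.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every position looks at every position at once."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well does this word's query match each word's key? (dot product / sqrt(d))
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Blend every word's value vector according to those weights.
        blended = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(blended)
    return outputs, all_weights

words = ["cat", "mouse", "it"]
# Hypothetical, hand-picked vectors; a trained model would learn these.
keys = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
queries = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0]]  # "it" asks a question that "cat" answers
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = self_attention(queries, keys, values)
for word, w in zip(words, weights[2]):
    print(f"'it' -> {word}: {w:.2f}")  # most of the weight lands on "cat"
```

Every word’s scores are computed against every other word in the same pass, which is what lets the Transformer “taste the whole pot at once” instead of stepping through the sentence word by word.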

The Training Process: How AI Learns the Art of Language

A freshly initialized Transformer brain is a blank slate. It doesn’t know any words, rules, or facts. To become the powerful tool we interact with, it must go to school. And its school is a vast swath of the internet.

This learning process happens in two main phases:

Phase 1: The Data Diet (A Library of Everything)

The model is trained on a colossal, nearly unimaginable dataset of text. We’re talking about terabytes of data gathered from books, Wikipedia, news articles, scientific papers, code repositories, and websites. This is its textbook: a sweeping sample of human knowledge and communication.

Phase 2: The Learning Game (Fill-in-the-Blank on Steroids)

How does it learn from this mountain of text? Through a simple yet powerful game: fill-in-the-blank.

The training process involves showing the model a sentence with a word missing. For example:

“The ___ sat on the mat and licked its paw.”

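The model’s job is to predict the missing word. It makes a guess, and if it’s wrong (e.g., it guesses “car”), its internal settings get a subtle adjustment, a digital nudge, toward the right answer. If it’s right (“cat”), the nudge still happens, making it a little more confident next time. (Strictly speaking, GPT-style models play a variant of this game in which the blank is always the next word, predicted from everything that comes before it.)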

Now, imagine this process repeated not a thousand, not a million, but trillions of times. The model starts with simple words, then phrases, then entire clauses. Through this endless game, it internalizes the rules of grammar (“a” vs. “an”), common facts (“Paris is the capital of France”), storytelling structures, writing styles, and even reasoning patterns.

It’s not memorizing sentences; it’s learning the deep, statistical blueprint of how we communicate.
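The “digital nudge” is gradient descent on a loss function. Here’s a minimal sketch, assuming a made-up three-word vocabulary and a model whose only settings are one score per word. A real model nudges billions of internal weights via backpropagation, but the loop has the same shape: predict, measure the error with cross-entropy loss, adjust, repeat.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["cat", "car", "dog"]   # hypothetical tiny vocabulary
scores = [0.0, 0.5, 0.0]        # an untrained "model" that slightly prefers "car"
target = vocab.index("cat")     # correct answer for "The ___ sat on the mat..."
learning_rate = 0.5
losses = []

for step in range(20):
    probs = softmax(scores)
    # Cross-entropy loss: large when the model gives the right word a low probability.
    losses.append(-math.log(probs[target]))
    # Gradient of that loss for each score: (prob - 1) for the target, prob otherwise.
    grads = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    # The nudge: move every score a small step against its gradient.
    scores = [s - learning_rate * g for s, g in zip(scores, grads)]

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(f"p(cat) after training: {softmax(scores)[target]:.3f}")
```

Each pass raises the score for “cat” and lowers the others, even on steps where “cat” was already the top guess. Repeat that over trillions of examples and billions of weights, and the statistical blueprint emerges.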

The Text Generation Process: From Your Prompt to a Finished Paragraph

This is the moment of truth. You’ve typed your prompt and hit enter. What happens inside the machine? Let’s follow the journey step by step.

Step 1: You Provide the Prompt (The Seed)

Your input—”Explain quantum physics like I’m 10 years old”—is the starting pistol. It sets the context, the tone, and the goal for everything that follows.

Step 2: Tokenization: Chopping Up the Input

The AI doesn’t perceive words the way we do. It sees tokens. A token can be a whole word, part of a word (like “ing” or “un”), or even a single character. The sentence “Let’s play!” might be broken into the tokens ["Let", "'s", " play", "!"].

Working with word pieces means the model can get by with a fixed vocabulary and still handle rare, new, or complex words by assembling them from smaller tokens.
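Real tokenizers, such as the byte-pair encoding (BPE) schemes used by GPT models, learn their vocabulary of word pieces from data. As a simpler stand-in, here’s a sketch of greedy longest-match tokenization over a tiny, hand-made vocabulary. Both the `tokenize` function and the vocabulary are hypothetical, so a real model would likely split text differently.

```python
def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match: at each position, take the longest vocabulary entry that fits."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest remaining piece first, shrinking until something matches.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            # Fall back to a single character if no vocabulary entry matches.
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens

# Hypothetical toy vocabulary; real vocabularies hold tens of thousands of entries or more.
vocab = {"Let", "'s", " play", "!", "un", "believ", "able"}

print(tokenize("Let's play!", vocab))    # ['Let', "'s", ' play', '!']
print(tokenize("unbelievable", vocab))   # ['un', 'believ', 'able']
```

“Unbelievable” isn’t in the vocabulary as a whole word, yet it still comes out as three familiar pieces, and anything completely unknown falls back to single characters.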

Step 3: Analysis & Probability Calculation

Now, the Transformer engine roars to life. It takes your tokenized prompt and, using its self-attention mechanism, analyzes the entire sequence. It then accesses everything it learned during its training to calculate a probability score for every single token in its vocabulary.

For our “quantum physics” prompt, tokens like “Okay,” “So,” or “Imagine” might have very high probabilities for the first word. Tokens like “zebra” or “carburetor” would have probabilities effectively at zero.

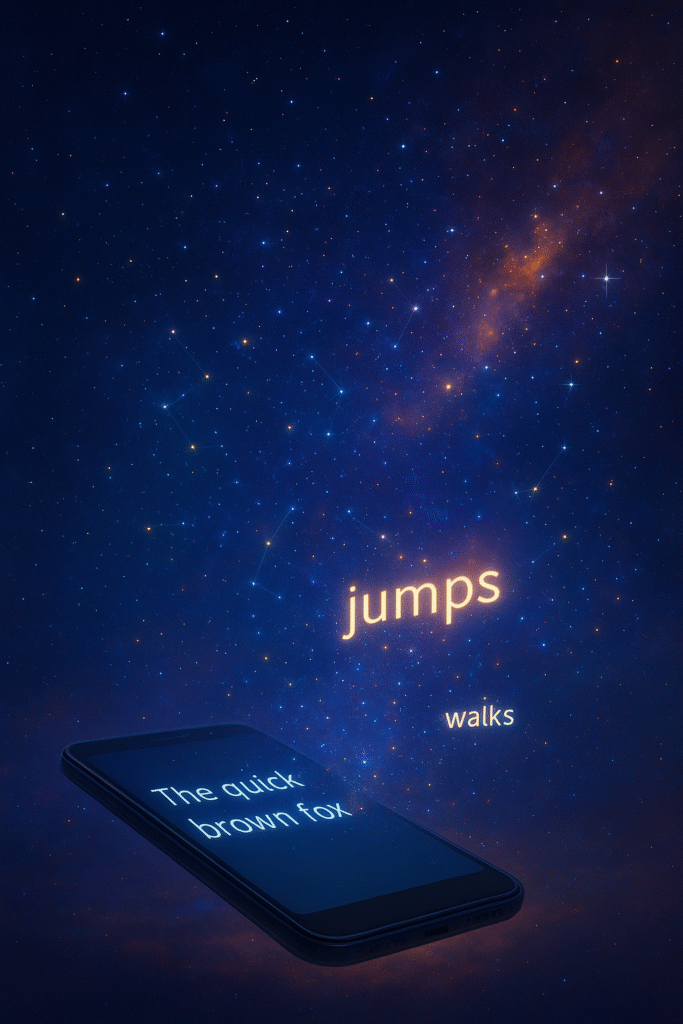
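Models usually produce these probability scores by first computing a raw score (a “logit”) for every token and then passing all of them through a softmax function, which makes every value positive and the total add up to 1. The scores below are invented for illustration; a real model computes one for every token in its vocabulary.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical raw scores for the first word of a reply to
# "Explain quantum physics like I'm 10 years old"
logits = {"Okay": 4.0, "So": 3.5, "Imagine": 3.8, "zebra": -6.0, "carburetor": -7.0}

for tok, p in sorted(softmax(logits).items(), key=lambda kv: -kv[1]):
    print(f"{tok:>10}: {p:.5f}")
```

Because softmax exponentiates the scores, a gap of about ten points is enough to push “zebra” and “carburetor” down to effectively zero.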

Step 4: The Choice: Picking the Next Word

This is where the art meets the science. The model doesn’t always pick the single most probable word. If it did, its writing would be incredibly boring, predictable, and repetitive.

Instead, it often uses a sampling method such as “nucleus sampling” (top-p). This means it takes the smallest group of top candidates whose combined probability reaches a threshold p, then randomly selects one from that group, with more probable tokens more likely to be chosen.

- Always picking the #1 candidate (Greedy Decoding): “The cat sat on the mat and it was very…” -> “soft” -> “and” -> “the” -> “cat” -> …

- Choosing from a pool of good candidates (Top-p Sampling): “The cat sat on the mat and it was very…” -> [“soft” (40%), “comfortable” (35%), “warm” (25%)] -> randomly picks “warm” -> “from the sunbeam” -> …

This element of controlled randomness is crucial for creativity, variety, and surprise. It’s why you can get a different output each time you regenerate a response.

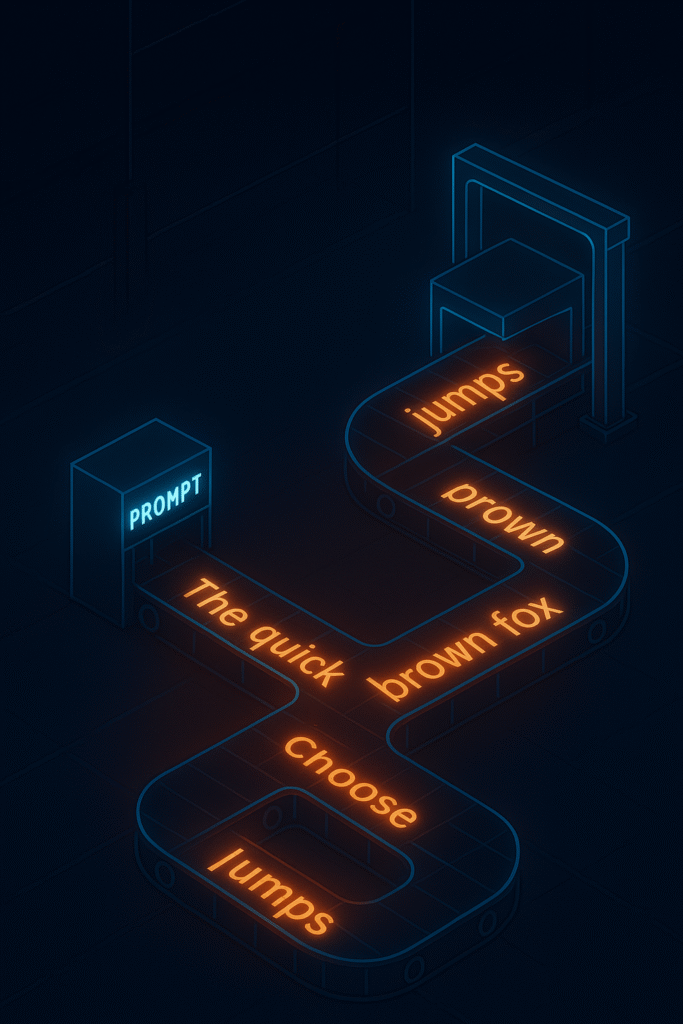
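Here’s a minimal sketch of both strategies, using made-up probabilities loosely based on the example above (plus two unlikely extras). The functions `greedy` and `top_p_sample` are hypothetical names, and p=0.9 is an arbitrary illustrative threshold, not a setting any particular product is known to use.

```python
import random

def greedy(probs: dict[str, float]) -> str:
    """Always pick the single most probable token."""
    return max(probs, key=probs.get)

def top_p_sample(probs: dict[str, float], p: float, rng: random.Random) -> str:
    """Nucleus sampling: keep the smallest set of top tokens whose total probability
    reaches p, then draw one of them in proportion to its probability."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    tokens = [t for t, _ in nucleus]
    weights = [w for _, w in nucleus]  # random.choices handles weights that don't sum to 1
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical next-token probabilities after "The cat sat on the mat and it was very"
probs = {"soft": 0.40, "comfortable": 0.35, "warm": 0.20, "purple": 0.04, "loud": 0.01}
rng = random.Random(0)

print(greedy(probs))  # soft
print([top_p_sample(probs, p=0.9, rng=rng) for _ in range(5)])
```

With p=0.9, “purple” and “loud” fall outside the nucleus and can never be chosen, while “soft,” “comfortable,” and “warm” each get a chance in proportion to their probabilities. Greedy decoding, by contrast, returns “soft” every single time.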

Step 5: The Loop Begins (The “Auto” in Autocomplete)

The selected token (“warm”) is appended to the sequence. The input is now “The cat sat on the mat and it was very warm,” and the process repeats:

- The entire new sequence is analyzed.

- Probabilities for the next token are calculated. (“from” might now be very likely after “warm”).

- A new token is chosen from the top candidates.

- It’s added to the sequence.

This loop continues, with each new word building on the growing context of all the words that came before it.

Step 6: Knowing When to Stop

The loop doesn’t go on forever. It stops when one of two things happens:

- The model generates a special “end of text” token, which it has learned to produce when a response feels complete.

- It hits a predefined length limit (counted in tokens) that prevents extremely long and potentially costly outputs.
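Steps 3 through 6 fit together into one short loop. In this sketch, a hypothetical lookup table `TOY_MODEL` stands in for the Transformer and only looks at the last token, whereas a real model re-reads the whole sequence each time. The names `generate`, `next_token_probs`, and `max_new_tokens` are illustrative, not any particular library’s API, and each token is sampled in proportion to its probability.

```python
import random

END_OF_TEXT = "<end of text>"

# Hypothetical stand-in for a trained model: last token -> next-token probabilities.
TOY_MODEL = {
    "very": {"warm": 0.6, "soft": 0.4},
    "warm": {"from": 0.9, END_OF_TEXT: 0.1},
    "soft": {END_OF_TEXT: 1.0},
    "from": {"the": 1.0},
    "the": {"sunbeam": 0.8, "mat": 0.2},
    "sunbeam": {".": 1.0},
    "mat": {".": 1.0},
    ".": {END_OF_TEXT: 1.0},
}

def next_token_probs(tokens: list[str]) -> dict[str, float]:
    """Steps 3: score the next token (this toy only looks at the last one)."""
    return TOY_MODEL.get(tokens[-1], {END_OF_TEXT: 1.0})

def generate(prompt_tokens: list[str], max_new_tokens: int, rng: random.Random) -> list[str]:
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):          # stop condition 2: length limit
        probs = next_token_probs(tokens)
        # Step 4: pick a token, weighted by probability.
        choice = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
        if choice == END_OF_TEXT:            # stop condition 1: end-of-text token
            break
        tokens.append(choice)                # Step 5: feed it back in and repeat
    return tokens

prompt = "The cat sat on the mat and it was very".split()
print(" ".join(generate(prompt, max_new_tokens=20, rng=random.Random(42))))
print(" ".join(generate(prompt, max_new_tokens=2, rng=random.Random(42))))
```

The second call shows the length limit at work: it can add at most two tokens, even if the toy model hasn’t produced its end-of-text token yet.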

And just like that, in a matter of seconds and after hundreds or thousands of these iterative predictions, a full, coherent, and (hopefully) helpful response appears on your screen.

Addressing the Elephant in the Room: Your Burning Questions

This process naturally raises some questions. Let’s tackle the most common ones.

If it’s just predicting the next word, how can it be creative?

This is the biggest mind-bender. Creativity, in many ways, is the recombination of existing ideas in novel ways. The AI has ingested the collective creativity of humanity—every poem, every story, every joke, every scientific theory. When you ask it to write a sonnet about pizza, it’s not inventing a new form of poetry; it’s recombining the structural patterns of a sonnet with the thematic elements of pizza, guided by the probabilities it has learned. The “random roll of the dice” in the sampling step allows for unexpected and novel combinations that can feel genuinely creative.

Why does it sometimes make up facts (“hallucinate”)?

This is a direct consequence of its design. Remember, the AI is a text completer, not a truth-teller. Its primary goal is to generate statistically plausible text, not factually accurate statements. If the most likely completion, based on its training data, is a statement that sounds authoritative but is factually incorrect, it will generate that. It has no ground-truth database to check against. It’s essentially a brilliant bullshitter, which is why fact-checking its output is absolutely critical.

What’s the difference between GPT, Claude, and Gemini?

They are all built on the Transformer architecture, so the core mechanics we’ve discussed are the same. The differences come down to:

- Training Data: The unique mix of text they were trained on shapes their knowledge and “personality.”

- Fine-Tuning: After initial training, models are further refined (fine-tuned) to be more helpful, harmless, and honest, often using human feedback. One widely used technique, Reinforcement Learning from Human Feedback (RLHF), is a key place where companies instill their specific values and safety measures.

- Architectural Tweaks: Each company has its own secret sauce and optimizations on top of the core Transformer.

Think of it like different chefs using the same fundamental recipe but different ingredients and seasoning, resulting in unique dishes.

Conclusion: The Magic is Math

So, the next time you see an AI generate text, you’ll know the secret. There’s no tiny author, no conscious mind. Instead, there’s an incredibly sophisticated pattern-matching engine, built on a revolutionary Transformer brain, that learned language by playing trillions of games of fill-in-the-blank.

The “magic” is, in reality, the breathtaking power of mathematics, data, and computational scale. It’s a tool that mirrors our own language back at us, revealing both the stunning potential of this technology and the profound complexities of the human communication it seeks to emulate.

Understanding how it works is the first step toward using it effectively, critically, and responsibly. Now go forth, and prompt with confidence!

What’s the most surprising or cleverest thing you’ve seen an AI generate? Share your story in the comments below!