Let’s be honest: the last year has felt like living inside a science fiction novel when it comes to AI. Headlines scream about groundbreaking models, mind-bending generative capabilities, and a future where code writes itself. It’s electric, it’s transformative, and for many engineering teams, it’s also creating a massive, messy headache.

Because here’s the dirty little secret of the AI revolution that no one talks about at the glitzy launch events: Getting an AI model to work in a demo is one thing. Getting it to work reliably, safely, and continuously in the real world is a whole other beast.

This is the “after-code” gap. It’s the sprawling, complex wilderness between a trained model and a production system that actually delivers value. And it’s where billion-dollar companies are built.

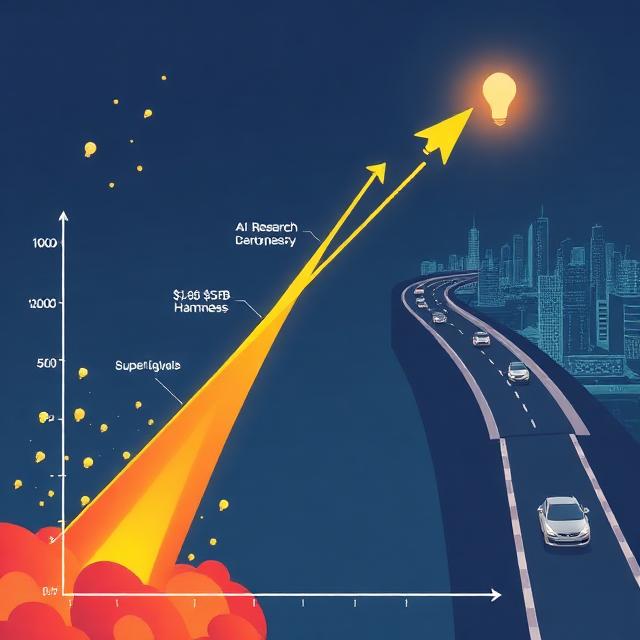

Enter Harness, a familiar name in the DevOps world, which just catapulted into the AI stratosphere with a $240 million funding round at a staggering $5.5 billion valuation. This isn’t just another funding announcement; it’s a loud, resonating bell that the industry is recognizing a critical problem. The race to automate the AI lifecycle has officially begun.

What Exactly is the “After-Code” Gap? (It’s Where Dreams Go to Die)

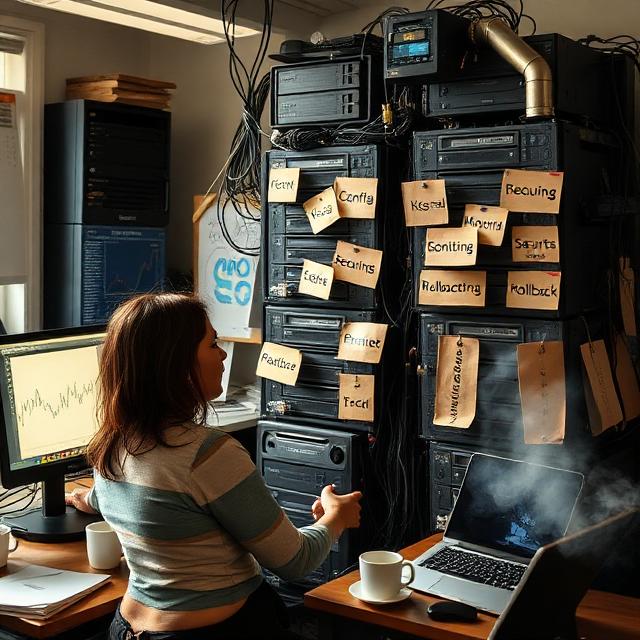

Imagine you’re a data scientist. You’ve spent months building a brilliant model to predict customer churn, generate marketing copy, or detect fraud. You’ve fine-tuned it, tested it, and the metrics look great. You proudly hand it off to the engineering team to deploy. This is where the handoff often turns into a fumble.

The engineering team looks at your model and asks:

- How do we package this and its massive dependencies?

- Where do we run it? On what infrastructure?

- How do we monitor its performance when real, messy data hits it?

- How do we know if its predictions are still accurate next month?

- How do we roll it back if something goes horribly wrong?

- How do we govern it for compliance and security?

Suddenly, that brilliant model is stuck in what’s been called “model purgatory” or the “last mile of AI.” This gap is filled with manual, repetitive, and brittle processes. It’s the reason so many AI projects—some estimates suggest over 80%—never make it to production.

As Jyoti Bansal, CEO and co-founder of Harness, put it in the announcement, “Everyone’s talking about building AI, but nobody’s talking about running it.” He’s right. We’ve been obsessed with the “create” button and forgotten about the “operate” manual.

From CI/CD Pioneer to AI Lifecycle Conductor

Harness didn’t just stumble into this problem. They’ve spent years building a reputation as a leader in automating traditional software delivery—the CI/CD (Continuous Integration/Continuous Deployment) pipeline. They helped companies move fast without breaking things, automating testing, deployment, and cloud cost management.

With this new funding, led by venture giants like Menlo Ventures and Lux Capital, Harness is making a bold pivot. They’re applying their automation DNA to the entire AI lifecycle, essentially building a CI/CD pipeline for machine learning.

Their platform, now supercharged, aims to tackle the entire journey:

- Continuous Training: Automatically retraining models when data drifts or performance decays. Your model shouldn’t get worse over time; it should learn and adapt.

- Continuous Evaluation: Constantly testing models against key metrics, shadow deployments, and champion/challenger scenarios. Is the new model actually better than the old one?

- Continuous Integration for AI: Automating the packaging, validation, and staging of model artifacts. Think of it as a rigorously inspected shipping container for your AI.

- Continuous Deployment & Delivery: Safe, progressive rollouts (canary deployments, blue-green) with instant rollback capabilities. No more “big bang” deployments that keep everyone up at night.

- Continuous Monitoring & Governance: Watching for model bias, data skew, and performance drops, and generating compliance logs automatically. This is the guardrail for responsible AI.
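To make the "data drifts" trigger from the first item a little more concrete, here is a minimal sketch of a drift check that could gate retraining. This is not Harness's implementation. The function names, the choice of the Population Stability Index (PSI) as the drift metric, and the 0.2 threshold are all assumptions picked for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """PSI between a training-time sample and a live sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp live values outside the baseline range into the edge buckets.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # Floor each share at a tiny value so the logarithm below is defined.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(
    baseline: Sequence[float], live: Sequence[float], threshold: float = 0.2
) -> bool:
    """Hypothetical retraining gate: True when drift exceeds the chosen threshold."""
    return population_stability_index(baseline, live) > threshold


if __name__ == "__main__":
    training_sample = [i / 100 for i in range(1000)]
    shifted_sample = [x + 5 for x in training_sample]
    print(should_retrain(training_sample, training_sample))
    print(should_retrain(training_sample, shifted_sample))
```

In a real pipeline a check like this would run on a schedule against recent production inputs, and a positive result would start a retraining job rather than just return a boolean.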

By automating this “after-code” pipeline, Harness promises to shrink deployment cycles from months to days, ensure models remain accurate and fair, and free up data scientists and ML engineers to do what they do best: innovate.
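The progressive rollouts with instant rollback described in the list above can be sketched as a short loop. Again, this is an illustration under stated assumptions, not a description of any vendor's product: `progressive_rollout`, the traffic steps, and the 2% error budget are hypothetical, and `error_rate_at` stands in for whatever monitoring query a real system would run at each step.

```python
from typing import Callable, Sequence


def progressive_rollout(
    error_rate_at: Callable[[int], float],
    steps: Sequence[int] = (5, 25, 50, 100),
    max_error_rate: float = 0.02,
) -> int:
    """Shift traffic to a new model in stages.

    Returns the final percentage of traffic on the new model:
    the last step if every stage stayed healthy, or 0 after a rollback.
    """
    for percent in steps:
        observed = error_rate_at(percent)
        if observed > max_error_rate:
            # Roll back: all traffic returns to the previous model.
            return 0
    return steps[-1]


if __name__ == "__main__":
    # A model that stays healthy at every traffic level.
    print(progressive_rollout(lambda percent: 0.01))
    # A model that degrades once it sees half of the traffic.
    print(progressive_rollout(lambda percent: 0.01 if percent < 50 else 0.05))
```

The point of the staged design is that a bad model is caught while it serves only a small slice of users, which is what makes the rollback cheap.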

Why This Funding is a Bellwether for the Entire Industry

A $5.5 billion valuation is eye-watering. But it’s a signal, not an anomaly. It tells us three crucial things about the state of AI:

1. The Focus is Shifting from Creation to Operation. The initial wave of AI investment flooded into model builders (OpenAI, Anthropic) and chipmakers (NVIDIA). Now, the smart money is following the pain, and the pain is in production. As Forbes notes, operational complexity is the primary barrier. Investors are betting that the companies that solve operationalization will be the true power brokers.

2. Platformization is Inevitable. The early days of any tech wave are fragmented. Data scientists stitch together open-source tools (MLflow, Kubeflow) with cloud services. It’s the “DIY” phase. But as the market matures, companies crave an integrated, enterprise-grade platform. They want a single pane of glass, not a dozen disconnected tools. Harness is positioning itself as that unified platform.

3. Enterprise Adoption Demands Safety Nets. Big banks, healthcare providers, and retail giants can’t afford rogue AI. They need audit trails, security protocols, and reliability guarantees. Harness’s deep roots in enterprise DevOps give it a credibility advantage in selling to these cautious, deep-pocketed customers. As an analyst from Lux Capital remarked, this is about “bringing rigor and reliability to the wild west of AI deployment.”

The Human Impact: What This Means for Teams on the Ground

Beyond the billion-dollar valuations, what does this actually change for the people building AI?

- For Data Scientists: It means less time being a “full-stack ML engineer” wrestling with Kubernetes and Docker, and more time doing actual data science. It means your models have a clear, reliable path to impact.

- For DevOps/Platform Engineers: It provides a familiar framework (CI/CD) extended into a new domain. You can apply your existing knowledge of safe deployment practices to the new world of models.

- For Business Leaders: It de-risks AI investment. It turns AI from a series of exciting experiments into a reliable, scalable factory for intelligent features. It directly answers the CFO’s question: “What’s the ROI on this AI project?”

The Road Ahead: Challenges and the Competitive Landscape

The path to a $5.5 billion valuation is paved with challenges. Harness isn’t alone in seeing this opportunity.

- Cloud Giants: AWS (SageMaker), Google Cloud (Vertex AI), and Microsoft Azure already offer extensive MLOps tooling. Harness must convince companies that it’s better as a neutral, multi-cloud platform.

- Specialized Startups: Companies like Weights & Biases (experiment tracking), Tecton (feature stores), and Databricks (lakehouse) are strong players in specific parts of the ML pipeline. Integration and competition will be fierce.

- The Complexity Beast: Automating the AI lifecycle is arguably harder than automating traditional software delivery. Models are non-deterministic, depend on data pipelines, and require nuanced evaluation. The platform that makes this truly simple will win.
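One way to see why non-determinism complicates things: a single evaluation run can make a new model look better by luck alone. A rough sketch of a champion/challenger gate that accounts for this is below. The function name, the `min_gain` margin, and the rule of requiring the gain to exceed the run-to-run spread are assumptions for illustration, and a crude heuristic rather than a proper statistical test.

```python
from statistics import mean, stdev
from typing import Sequence


def challenger_wins(
    champion_scores: Sequence[float],
    challenger_scores: Sequence[float],
    min_gain: float = 0.01,
) -> bool:
    """Promote the challenger only if its average score beats the champion's
    by more than min_gain plus the larger run-to-run standard deviation.

    Each sequence holds one score per repeated evaluation run (at least two).
    """
    gain = mean(challenger_scores) - mean(champion_scores)
    noise = max(stdev(champion_scores), stdev(challenger_scores))
    return gain > min_gain + noise


if __name__ == "__main__":
    champion = [0.80, 0.81, 0.79]
    print(challenger_wins(champion, [0.85, 0.86, 0.84]))  # clear improvement
    print(challenger_wins(champion, [0.81, 0.80, 0.82]))  # within the noise
```

A traditional deployment gate can compare exact outputs. A model gate has to compare distributions of outcomes, which is part of what makes this pipeline harder to automate.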

Conclusion: Building the Bridge to AI’s True Potential

The story of Harness’s massive funding is more than a financial win. It’s a narrative turning point for artificial intelligence.

We’ve been marveling at the spark of creation—the GPTs, the DALL-Es, the code generators. But sparks alone don’t power cities. You need transmission lines, substations, and safety grids. You need infrastructure.

Harness is betting its future on becoming the indispensable infrastructure for the AI era. They are building the bridge over the treacherous “after-code” gap. A bridge that allows the incredible ideas born in Jupyter notebooks to travel safely and efficiently into the applications we use every day.

This $5.5 billion valuation is a vote of confidence that the next chapter of AI won’t be written by those who build the smartest models alone, but by those who can operate them at scale. The automation of AI’s last mile has begun, and it just became a multi-billion-dollar race.

The age of AI as a science project is over. Welcome to the age of AI as a reliable, operational powerhouse. And the tools that make it possible are now the industry’s most coveted prize.

FAQ: Harness, AI’s “After-Code” Gap, and the $5.5B Valuation

Q1: What does Harness actually do?

At its core, Harness is an Intelligent Software Delivery Platform. Originally famous for automating traditional CI/CD pipelines (the process of building, testing, and deploying code), it has now pivoted heavily to automate the entire Machine Learning (ML) or AI lifecycle. Think of it as an “operating system” for getting AI models out of the lab and reliably into production applications, and then keeping them healthy and accurate over time.

Q2: What is the “after-code gap” or “last mile of AI”?

This is the critical, often-overlooked phase that comes after a data scientist builds and trains an AI model. It involves all the complex, manual work needed to deploy, monitor, manage, and update that model in a live environment. This gap includes tasks like model packaging, deployment orchestration, performance monitoring, detecting data drift, ensuring governance, and enabling safe rollbacks. It’s where most AI projects fail due to operational complexity.

Q3: Why is this problem worth a $5.5 billion valuation?

Investors are recognizing that while creating AI models is getting easier (thanks to tools like the ChatGPT API), operationalizing AI at scale is the next trillion-dollar challenge. As companies rush to implement AI, they are hitting a wall of operational overhead, risk, and cost. The platform that solves this, making AI safe, reliable, and scalable for enterprises, becomes mission-critical infrastructure. Harness’s valuation reflects the massive market need and its first-mover advantage in applying mature DevOps principles to this new space.

Q4: How is Harness different from cloud providers like AWS SageMaker or Google Vertex AI?

While AWS, Google, and Azure offer robust MLOps toolkits, these are often collections of services that require integration and are native to each provider’s own cloud. Harness positions itself as a unified, multi-cloud, vendor-agnostic platform. It can work with models and infrastructure across any cloud or on-premises data center. More importantly, it focuses on end-to-end automation and orchestration of the entire lifecycle, applying proven CI/CD practices in a cohesive way that many enterprises are already familiar with from their software teams.

Q5: Who is the main user of a platform like Harness?

It serves two primary users:

- MLOps/Platform Engineers: They use it to build standardized, secure, and automated pipelines for deploying and monitoring AI models. They set the guardrails and infrastructure.

- Data Scientists & ML Engineers: They use it to seamlessly push their models into production, monitor their performance, and trigger retraining—all with minimal manual overhead, allowing them to focus on building better models.

Q6: Isn’t this just MLOps? Why the new terminology?

Yes, the field is broadly known as MLOps (Machine Learning Operations). Harness is using terms like “after-code gap” to vividly describe the specific pain point within MLOps that they are automating. It’s a marketing and conceptual framing to make a complex problem easily understandable to business leaders and engineers alike, emphasizing the “gap” between creation and value.

Q7: What does this funding mean for the average software development team?

Even if your team isn’t building AI models today, this trend signals a major shift. First, AI-powered features will become easier and safer to integrate into your applications. Second, the principles of hyper-automation that Harness champions for AI will continue to bleed into traditional software delivery, raising the bar for efficiency, safety, and observability across the board.

Q8: What are the biggest challenges Harness faces?

- Competition: They’re up against deep-pocketed cloud giants and nimble, specialized startups.

- Complexity: Automating the non-deterministic nature of AI (where a model’s behavior can change with new data) is harder than automating traditional software deployment.

- Education: They need to convince enterprises that a unified platform is better than building a custom toolkit from various point solutions.

Q9: Where can I learn more about MLOps and the “after-code” challenge?

- Explore practitioner communities such as MLOps.community.

- Read the seminal paper “Hidden Technical Debt in Machine Learning Systems” by Google researchers.

- Follow industry analysis from firms like Gartner and Forrester on AI adoption and operationalization trends.